In the world of modern technology, our conception of what a computer can achieve continues to expand at an unprecedented rate. As powerful hardware becomes lighter, more portable and more affordable than ever before, our computing devices are evolving rapidly. Yet although our expectations of computers keep changing, the central piece of hardware that ties their components together has not. The motherboard is one of the most integral elements of a modern computer, and it has served as the focal point of operations since the days of the first desktop computers. Understanding the motherboard's function can give you a better idea of how computers work and how all the various technology "under the hood" fits together.

The Function of Motherboard Hardware

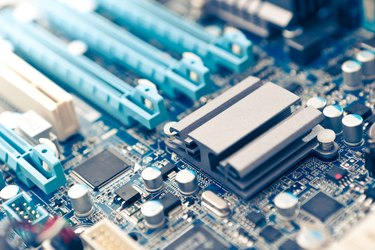

The motherboard, also referred to as the main circuit board, is the home base for many important hardware components, including the central processing unit (CPU), memory, sound cards and other peripheral devices. During use, the motherboard provides the electrical connections between these components so that they can exchange data and complete the tasks the user gives them. The components mounted on the board, especially the CPU, generate significant heat as they work. Because of this, heat sinks and cooling fans are usually attached to the CPU and other hot-running chips on the motherboard to keep temperatures under control and protect the hardware from heat damage.

Exploring Modern Motherboards

One of the most important hardware items attached to the motherboard is the CPU. Most desktop motherboards have a dedicated CPU socket, typically located near the center of the board, that is designed solely to hold the computer's processor. Although much of the other hardware connected to the motherboard is critical for a computer to function properly, without a CPU the computer cannot function at all.

An often overlooked element of the motherboard that plays a huge role in keeping the computer running is the power connector. The main power connector on the motherboard usually has 20 or 24 pins and connects directly to the matching cable from the computer's power supply. Once this connection is established, the motherboard distributes power to the components plugged into it, such as the CPU, memory and expansion cards. With that in mind, the motherboard can be seen as the central hub not only for moving information within the computer but also for distributing electrical power.

Although motherboards deliver a great deal of functionality to modern computer users, they are available at affordable price points. Virtually all major technology suppliers offer motherboards for sale, allowing individuals to build their own computers on a budget.